We can import sklearn's cosine_similarity function from sklearn.metrics.pairwise. It calculates the cosine similarity between the rows of two NumPy arrays. In this article, we will compute cosine similarity step by step.

sklearn cosine similarity: Python –

Suppose you have two documents of different sizes. How will you compare them, or measure how similar they are? Cosine similarity is a metric that lets you measure the similarity of documents once each document is represented as a vector.

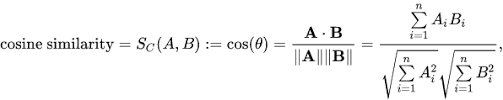

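The formula for the cosine similarity of two vectors $A$ and $B$ is $\cos\theta = \dfrac{A \cdot B}{\lVert A \rVert \, \lVert B \rVert}$, that is, the dot product of the vectors divided by the product of their lengths.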
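As a worked example, here is the formula applied by hand to the two vectors used in Step 2 below, $A = (12, 41, 60, 11, 21)$ and $B = (40, 11, 4, 11, 14)$. The square roots are rounded to three decimals.

$$
\begin{aligned}
A \cdot B &= 12 \cdot 40 + 41 \cdot 11 + 60 \cdot 4 + 11 \cdot 11 + 21 \cdot 14 = 1586 \\
\lVert A \rVert &= \sqrt{12^2 + 41^2 + 60^2 + 11^2 + 21^2} = \sqrt{5987} \approx 77.376 \\
\lVert B \rVert &= \sqrt{40^2 + 11^2 + 4^2 + 11^2 + 14^2} = \sqrt{2054} \approx 45.321 \\
\cos\theta &= \frac{1586}{\sqrt{5987}\,\sqrt{2054}} \approx \frac{1586}{3506.75} \approx 0.4523
\end{aligned}
$$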

We will compute it with sklearn in a few small steps. Let's start.

Step 1: Importing package –

First, we import the cosine_similarity function from the sklearn.metrics.pairwise module. We also import NumPy to create the arrays. Here is the syntax:

```python
from sklearn.metrics.pairwise import cosine_similarity
import numpy as np
```

Step 2: Vector Creation –

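Next, to demonstrate the cosine_similarity function, we need vectors. Here the vectors are NumPy arrays. Note the double brackets: cosine_similarity expects 2-D input of shape (n_samples, n_features), so each vector is stored as a single row. Let's create the arrays.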
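Why the double brackets? cosine_similarity validates its inputs as 2-D arrays and raises a ValueError when given a 1-D array. Here is a minimal sketch, assuming you start from flat 1-D vectors, of turning each one into a single-row 2-D array with reshape(1, -1). The names flat_1, flat_2, row_1 and row_2 are only for illustration.

```python
import numpy as np
from sklearn.metrics.pairwise import cosine_similarity

# Flat 1-D vectors, shape (5,): passing these directly would raise a ValueError
flat_1 = np.array([12, 41, 60, 11, 21])
flat_2 = np.array([40, 11, 4, 11, 14])

# reshape(1, -1) makes one row with as many columns as needed: shape (1, 5)
row_1 = flat_1.reshape(1, -1)
row_2 = flat_2.reshape(1, -1)

score = cosine_similarity(row_1, row_2)  # a 1x1 matrix
print(row_1.shape, score.shape)
```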

```python
array_vec_1 = np.array([[12, 41, 60, 11, 21]])
array_vec_2 = np.array([[40, 11, 4, 11, 14]])
```

Step 3: Cosine Similarity –

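Finally, once we have the vectors, we call cosine_similarity() with both of them. It returns their cosine similarity as a 1×1 matrix. In general, the value lies between -1 and 1, but for vectors with non-negative entries, like these (or count and TF-IDF vectors), it lies between 0 and 1. A value of 0 means the vectors have nothing in common (they are orthogonal), while a value of 1 means they point in the same direction, i.e. they are as similar as possible.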
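To see the whole range in action, here is a small sketch with hand-picked vectors (chosen purely for illustration): a scaled copy of a vector, a vector orthogonal to it, and its negation. Because the entries are allowed to be negative here, the score can drop below 0.

```python
import numpy as np
from sklearn.metrics.pairwise import cosine_similarity

base = np.array([[1.0, 2.0, 3.0]])
candidates = {
    "same direction": np.array([[2.0, 4.0, 6.0]]),   # base scaled by 2
    "orthogonal": np.array([[3.0, 0.0, -1.0]]),      # dot product with base is 0
    "opposite": np.array([[-1.0, -2.0, -3.0]]),      # base flipped
}

scores = {}
for name, other in candidates.items():
    # [0, 0] pulls the single score out of the 1x1 result matrix
    scores[name] = float(cosine_similarity(base, other)[0, 0])
    print(f"{name}: {scores[name]:.4f}")
```

Scaling a vector does not change its score, which is why documents of different lengths can still be compared.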

```python
cosine_similarity(array_vec_1, array_vec_2)
```

Complete code with output –

Let’s put the code from each step together. Here it is-

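```python
from sklearn.metrics.pairwise import cosine_similarity
import numpy as np

# Each vector is a 2-D array with a single row: shape (1, 5)
array_vec_1 = np.array([[12, 41, 60, 11, 21]])
array_vec_2 = np.array([[40, 11, 4, 11, 14]])

# Prints a 1x1 matrix holding the similarity of the two rows
print(cosine_similarity(array_vec_1, array_vec_2))
```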

Here we used two different vectors. The function returned a cosine similarity of about 0.45227, which means the vectors are neither very similar nor very different. In real-world scenarios, we use text embeddings as the NumPy vectors. We can generate these embeddings with TF-IDF, CountVectorizer, FastText, BERT, etc.
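As a minimal sketch of the text case, TF-IDF is used here as the embedding. The two example sentences are made up for illustration. sklearn's TfidfVectorizer turns each document into a row of a sparse matrix, and cosine_similarity accepts sparse input directly.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity

# Two hypothetical documents of different lengths
doc_1 = "Cosine similarity measures the angle between two vectors."
doc_2 = ("To compare two documents, we turn each one into a vector "
         "and measure the angle between the vectors with cosine similarity.")

vectorizer = TfidfVectorizer()
# One row per document, one column per term in the shared vocabulary
tfidf = vectorizer.fit_transform([doc_1, doc_2])

# Slicing with 0:1 and 1:2 keeps each row 2-D, as cosine_similarity expects
similarity = cosine_similarity(tfidf[0:1], tfidf[1:2])[0, 0]
print(round(float(similarity), 4))
```

Since TF-IDF weights are never negative, the score here lands between 0 and 1.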

Conclusion –

Cosine similarity is one of the most widely used ways to measure the similarity between documents. Because it compares the direction of the vectors rather than their magnitude, documents of different sizes can still be compared. We can also implement it without the sklearn module, but that takes more work; sklearn simplifies it. We hope this article has made the implementation clear. If you find any information gaps, please let us know in the comments below.
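For comparison, here is a minimal sketch of the same calculation without sklearn or NumPy, written directly from the formula using only the standard library. The function name cosine_sim is hypothetical. Raising an error for a zero vector is a choice made for this sketch, because the formula would otherwise divide by zero.

```python
import math


def cosine_sim(a, b):
    """Cosine similarity of two equal-length sequences of numbers."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))       # A . B
    norm_a = math.sqrt(sum(x * x for x in a))    # ||A||
    norm_b = math.sqrt(sum(y * y for y in b))    # ||B||
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# The same vectors as in the example above, as plain lists
vec_1 = [12, 41, 60, 11, 21]
vec_2 = [40, 11, 4, 11, 14]
print(round(cosine_sim(vec_1, vec_2), 5))  # 0.45227
```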

Frequently Asked Questions

1. What is cosine similarity?

Cosine similarity is used in information retrieval and text mining. It measures the similarity between two vectors. To find the similarity between two documents, you represent each document as a vector and compute the cosine of the angle between the two vectors.

2. How does cosine similarity work?

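Let's say you have two documents. A common way to check whether they are similar is to represent each one as a vector and compute the cosine similarity between them. Its value lies between -1 and 1. A value of 1 (or close to 1) means the vectors point in the same direction, so the documents are very similar; a value of 0 means they have nothing in common; a value of -1 means they point in opposite directions. For non-negative text vectors, such as word counts or TF-IDF, the value stays between 0 and 1.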

If you have a large number of documents, you can use the pairwise cosine similarities between them to cluster the documents. This helps you find documents that are related to each other.
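A minimal sketch of the first step: when cosine_similarity is called with a single array, it compares every row with every other row and returns a square similarity matrix. The four document vectors below are hypothetical term counts. Rather than running a full clustering algorithm, this sketch only finds each document's most similar neighbour, which is often the starting point for grouping related documents.

```python
import numpy as np
from sklearn.metrics.pairwise import cosine_similarity

# Hypothetical document vectors, one row per document (e.g. term counts)
docs = np.array([
    [3, 0, 1, 0],
    [2, 0, 1, 0],
    [0, 4, 0, 1],
    [0, 3, 0, 2],
])

# Square matrix: sim_matrix[i, j] is the similarity of documents i and j
sim_matrix = cosine_similarity(docs)

# Exclude each document's similarity with itself before picking the best match
np.fill_diagonal(sim_matrix, -np.inf)
most_similar = sim_matrix.argmax(axis=1)
print(most_similar)  # [1 0 3 2]
```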

3. What are the applications of cosine similarity?

There are many real-life applications of cosine similarity. Besides information retrieval, such as measuring the similarity between documents, here are some more examples:

1. Text Mining and Natural Language Processing (NLP)

2. Document Similarity and Plagiarism Detection

3. Image Similarity

4. Collaborative Filtering

5. Clustering and Dimensionality Reduction

6. Audio Analysis

Thanks

Data Science Learner Team
